Configuring Ollama and the Continue VS Code Extension for a Local Coding Assistant

Prerequisites

- Ollama installed on your system. Download the application for your operating system from the Ollama website.

- An AI model. This guide uses Code Llama, but you can use any model you prefer. Code Llama is a model for generating and discussing code, built on top of Llama 2. It supports many of the most popular programming languages, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, and Bash. If it is not installed, you can pull it with the following command:

ollama pull codellama

You can also install Starcoder 2 3B for code autocomplete by running:

ollama pull starcoder2:3b
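
To confirm that both pulls succeeded, one option is to ask the local Ollama server which models it has. The following is a minimal sketch, assuming Ollama is running on its default address and that its `/api/tags` endpoint returns a JSON object with a `models` list whose entries carry a `name` field. The helper names `missing_models` and `fetch_tags` are illustrative and not part of Ollama or Continue.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def missing_models(tags_payload, required):
    """Return the required model names that are absent from an /api/tags payload."""
    installed = {m["name"] for m in tags_payload.get("models", [])}
    missing = []
    for name in required:
        # A model pulled without an explicit tag is listed as "<name>:latest".
        full_name = name if ":" in name else name + ":latest"
        if full_name not in installed:
            missing.append(name)
    return missing


def fetch_tags(base_url=OLLAMA_URL):
    """Fetch the locally installed models from a running Ollama server."""
    with urllib.request.urlopen(base_url + "/api/tags", timeout=5) as resp:
        return json.load(resp)
```

Calling `missing_models(fetch_tags(), ["codellama", "starcoder2:3b"])` should return an empty list when both models are installed; any name it does return still needs an `ollama pull`.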

NOTE: Choose models that fit your machine's available memory (RAM or VRAM). A model that is too large for your system will run slowly or fail to load.

Installing and configuring Continue

Install Continue from the VS Code Marketplace.

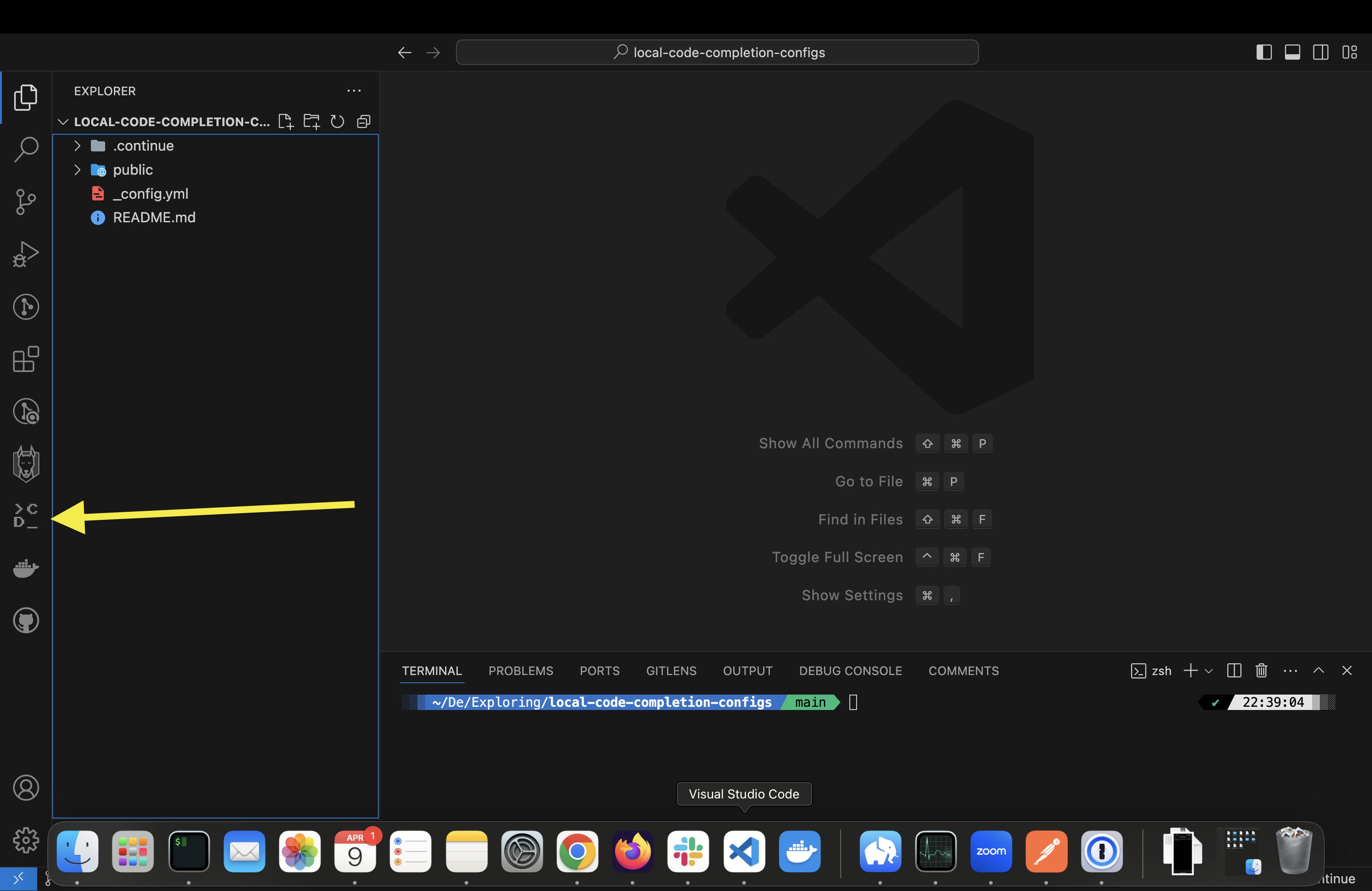

After installation, you should see it in the sidebar, as shown below:

Configuring Continue to use local model

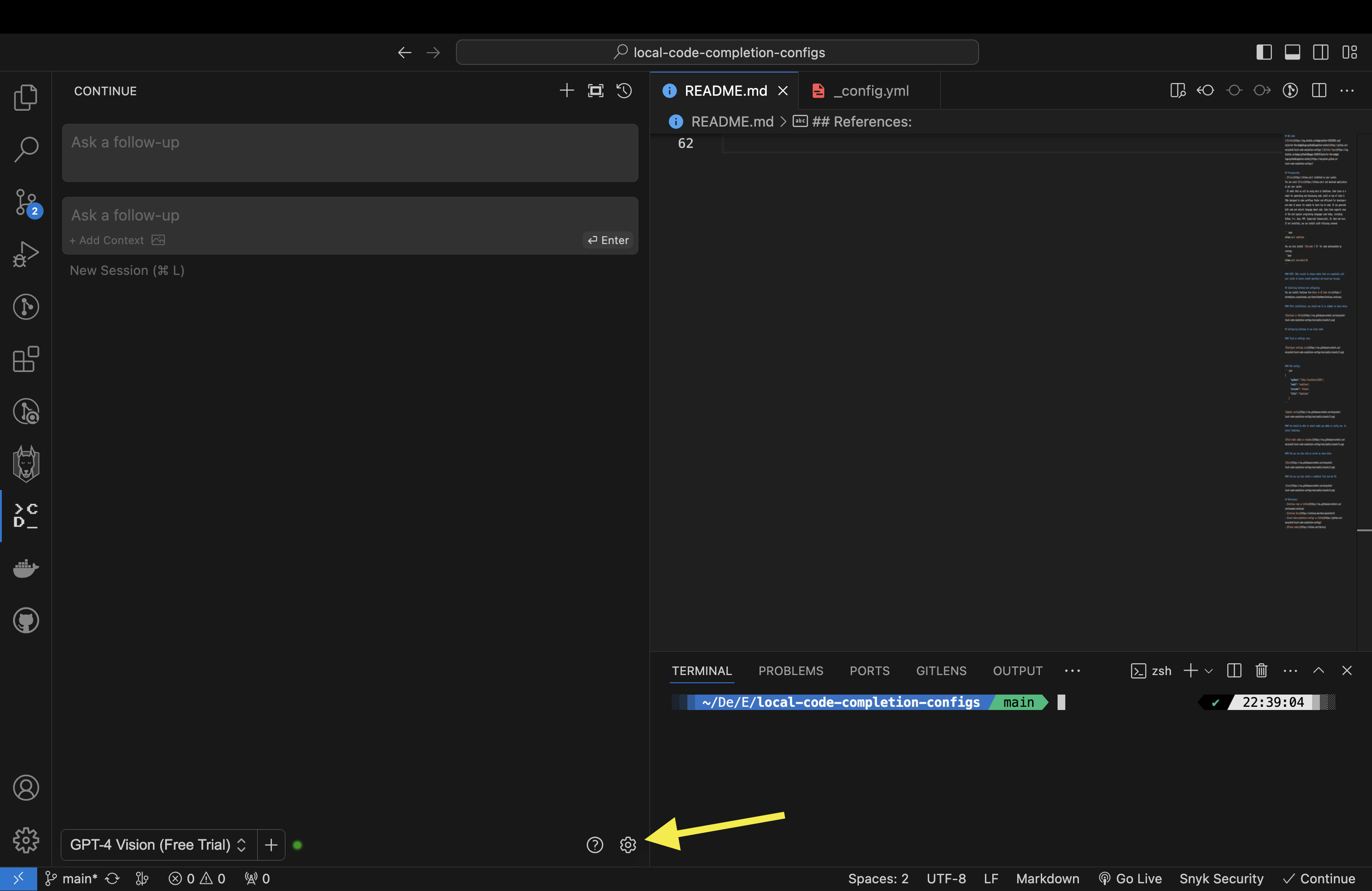

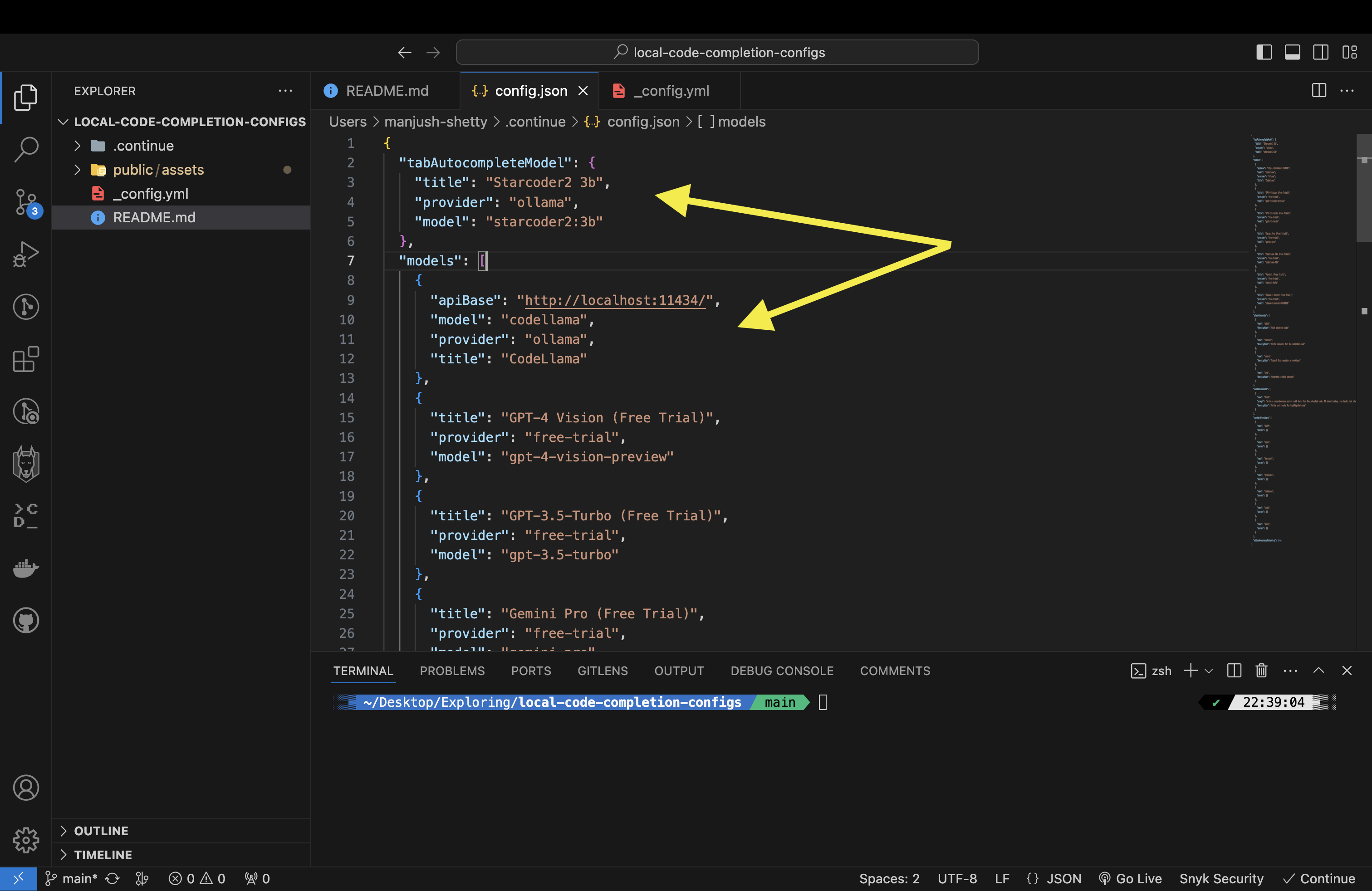

Click the settings icon. It opens config.json in your editor.

Add the following entry to the models array:

{
  "apiBase": "http://localhost:11434/",
  "model": "codellama",
  "provider": "ollama",
  "title": "CodeLlama"
}

Also add tabAutocompleteModel to the config:

"tabAutocompleteModel": {
  "apiBase": "http://localhost:11434/",
  "title": "Starcoder2 3b",
  "provider": "ollama",
  "model": "starcoder2:3b"
}
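
If you would rather apply both changes programmatically than edit the file by hand, the merge can be sketched as below. This assumes the config is a JSON object in which chat models live in a top-level `models` list and autocomplete is set through the `tabAutocompleteModel` key; the function name `with_local_models` is hypothetical. Loading and saving the file itself is left out, since its location depends on your installation.

```python
import copy

# The two entries from this guide, expressed as Python dicts.
CHAT_MODEL = {
    "apiBase": "http://localhost:11434/",
    "model": "codellama",
    "provider": "ollama",
    "title": "CodeLlama",
}

AUTOCOMPLETE_MODEL = {
    "apiBase": "http://localhost:11434/",
    "title": "Starcoder2 3b",
    "provider": "ollama",
    "model": "starcoder2:3b",
}


def with_local_models(config, chat_model=CHAT_MODEL,
                      autocomplete_model=AUTOCOMPLETE_MODEL):
    """Return a copy of a config dict with the two Ollama entries applied."""
    updated = copy.deepcopy(config)
    # Assumption: chat models are stored in a top-level "models" list.
    models = updated.setdefault("models", [])
    # Drop any existing entry with the same title so reruns do not duplicate it.
    models[:] = [m for m in models if m.get("title") != chat_model["title"]]
    models.append(dict(chat_model))
    updated["tabAutocompleteModel"] = dict(autocomplete_model)
    return updated
```

The function leaves the input untouched and returns a new dict, so you can inspect the result before writing it back to config.json.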

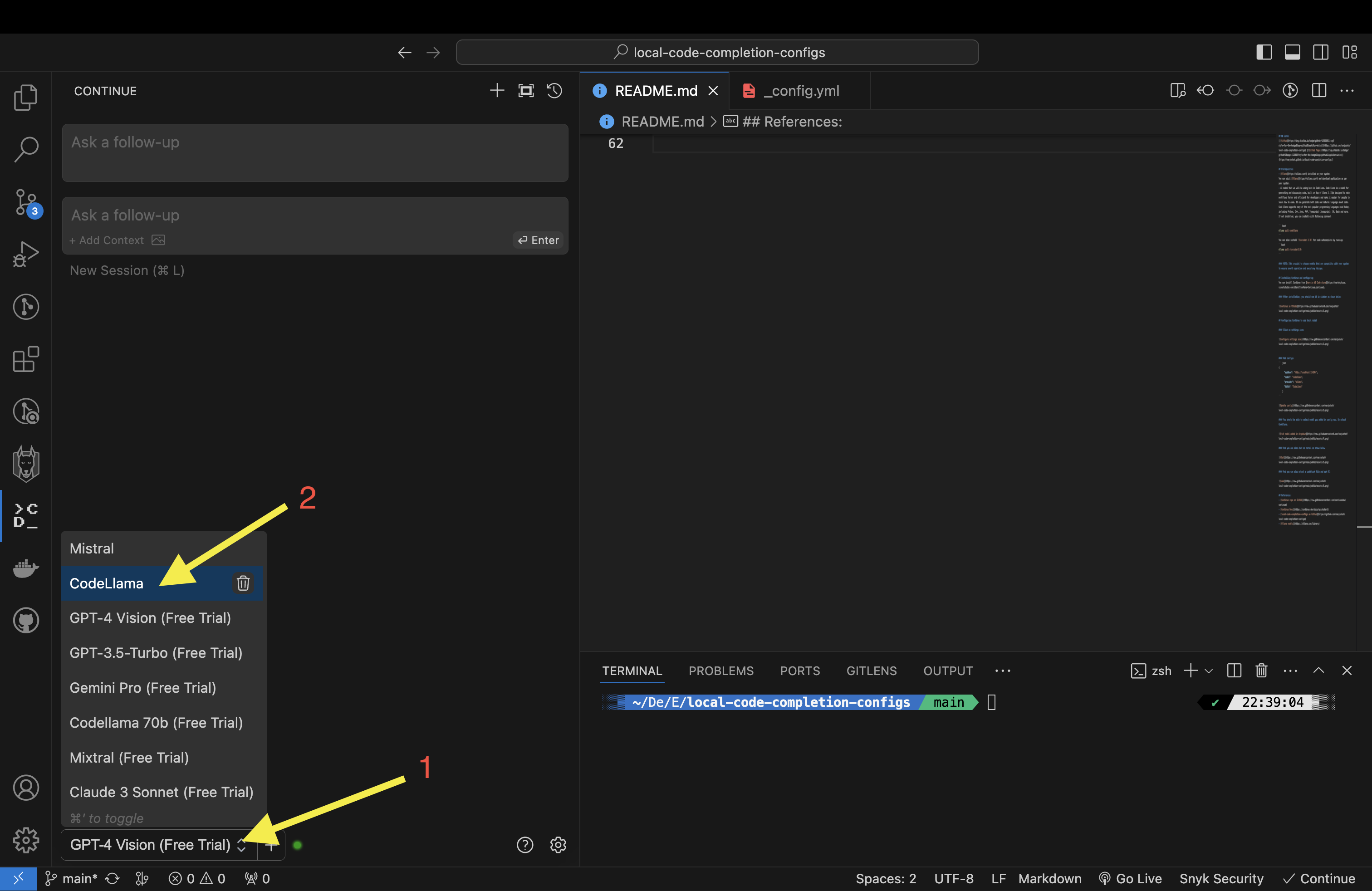

Select CodeLlama, which appears in the model dropdown once you have added it to the config.

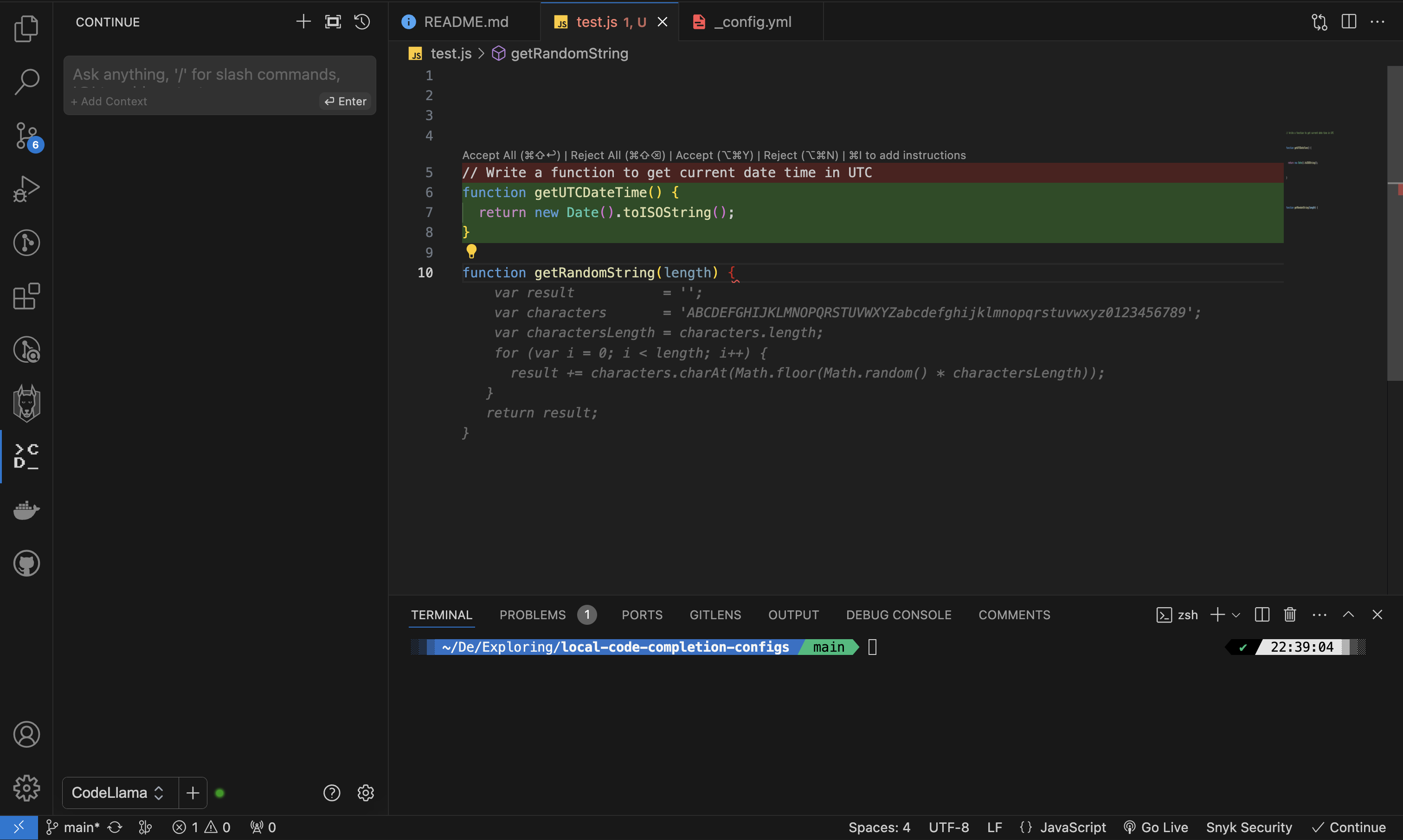

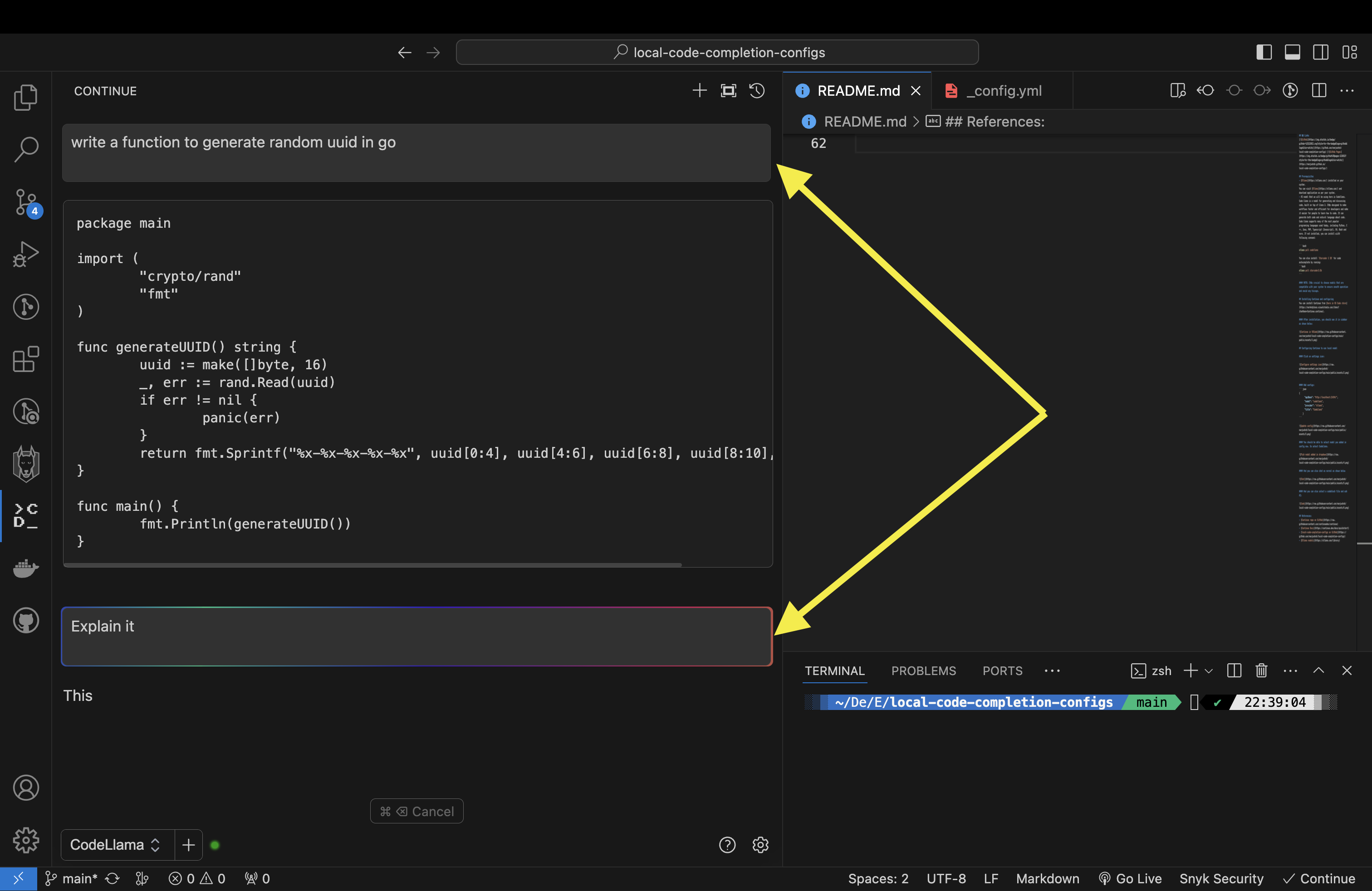

Once the model is configured, you should be able to ask it questions in the chat window.
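
If the chat window does not respond, it can help to query the model directly and rule out the extension as the cause. The sketch below assumes Ollama's `/api/generate` endpoint accepts a JSON body with `model`, `prompt`, and `stream` fields and returns the generated text under a `response` key. The helper names `build_generate_request` and `ask` are illustrative only.

```python
import json
import urllib.request


def build_generate_request(prompt, model="codellama",
                           base_url="http://localhost:11434"):
    """Build a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks for one complete JSON reply instead of chunks.
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send a prompt to the local model and return its text reply."""
    request = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as resp:
        # Assumption: the reply text is stored under the "response" key.
        return json.load(resp)["response"]
```

For example, `ask("Write a Python function that reverses a string.")` should return generated text if Ollama is running and codellama is installed. If that call works but the chat window does not, the problem is more likely in the Continue configuration than in Ollama.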

You can also select a code block in a file and ask the AI about it, similar to Copilot: